ML 7: De-noising Auto Encoders

- Published on

- ∘ 33 min read ∘ ––– views

Previous Article

Next Article

Continuing the revival of the Machine Learning series, this post serves as a deep dive into the mechanics of De-noising Auto-Encoders. It assumes a rough understanding of how auto-encoders work, but for the most part we can treat that architecture as a blackbox and just focus on that de-noising prefix.

Noise, with respect to images, refers to variations in pixel data which can be caused by any number of things like imperfections in the imaging device, I/O corruption, or just the weathering the sands of time. Real world noise is often modeled as Gaussian or Poisson signals – each of which has some nice properties that can be exploited by computers. For example, Gaussian noise is additive, meaning that for some input vector , we can construct a noisy version of it, denoted by simply adding a noisy vector comprised of samples from a normal distribution to it:

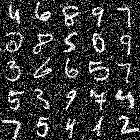

such that, regardless of the underlying pixel data represented by , the noisy image will be uniformly distorted, like so:

Gaussian noise, like any other Gaussian, is parameterized by corresponding to the mean and standard deviation of the distribution, where a smaller standard deviation (or a tighter distribution) corresponds to a smaller amount of noise:

In this post, we're primarily interested in methods of removing noise using Auto-Encoders.

Auto-Encoders

The typical Auto-Encoder architecture resembles the following:

In the case of a De-noising Auto-Encoder, our input will consist of noisy data (images), and our desired output will be less-noisy outputs. To compute the loss function required to train the network, we can get away with just measuring the Mean Squared Error between the cleaned version of our input and its commensurate de-noised output:

This implies a requirement for paired training data of the shape:

– that is, cleaned training images and corrupted samples thereof. This is all pretty straightforward stuff (as far as AEs go), so in this post I want to specifically try to better understand the function which is approximated by our De-noising Auto Encoder which maps noisy images to non-noisy ones.

Manifold Hypothesis

If we consider the projection of high-dimensional pixel data on the plane, it should be intuitively obvious that most of the points in this space are occupied by what we'd consider to be meaningless "noisy" images (sampling a random point in the space of all 28x28 images will most likely result in hot trash salt & pepper), and the subspace of semantically meaningful points (28x28 configurations of pixels that resemble shapes, letters, numbers, etc.) would be comparatively sparse:

We might describe this relationship by saying that the set of images representing e.g. numbers lies on a lower-dimensional manifold embedded in the much larger space of all possible image configurations:

This is known as the manifold hypothesis.2

Let's take these three numerical images and assume they're in our dataset. When we add noise to them, the noise perturbs the points along/away from the manifold, causing them to drift away from our low dimensional structure:

Depending on the amount of noise, the images might still closely resemble the originals, or otherwise contain enough semantic information to be recognizable. Our de-noiser, then, is the transformation which maps noisy images back onto the manifold:

And in doing so, the network not only removes noise, but it learns the structure of the manifold itself as well.

Minimum Mean Squared Error

While this idea of projecting on a semantically meaningful manifold is neat in theory, is that actually what's happening? Mathematically, our de-noising neural network is modeled by:

together with the aforementioned MSE loss function which computes the difference between the clean data and the de-noised estimate:

Thus, training our network is equivalent to solving the optimization problem:

where is the collection of parameters (weights & biases) of our network. Alternatively, we can omit mention of these learnable parameters and instead just express the training process in terms of the function that out network approximates:

which is called the Minimum MSE Estimator, and is the which minimizes the righthand side of that equation. This is super convenient because MMSEs predate neural networks, deep neural networks, and modern ML by a good bit. They're comparatively well-understood, and one established property we can exploit is that the MMSE is the mean of the posterior distribution. That is:

or, in other words, our function estimates how likely a clean reconstruction is, given a noisy input which is precisely what we're trying to optimize for! To visualize what this means, lets suppose we have a set of data points, and that there is an underlying, unknown distribution governing this data set with a density function (the prior distribution):

Now, say we have a Gaussian-noisy observation .

The likelihood of is Gaussian since is corrupted by Gaussian noise. If we had access to the expression of the prior distribution governing our dataset, we could compute the exact posterior distribution :

From this posterior, we could then find the Maximum a Priori estimate (MAP), which is the image that is most likely given our noisy input. In practice, though, we almost never know the actual prior distribution and therefore we also don't know the posterior either. Hence the need for estimators in the first place. However, as noted above, our neural network can be trained to learn the mean of the posterior distribution:

So, the neural network does implicitly learn some information about the prior distribution, even though it doesn't have access to it. We can take this a step further to gain additional intuition about what exactly our neural network is learning to further compare the manifold hypothesis to empirical behavior, but first we need to introduce some other terms and expressions used to model/approximate this behavior.

Scores

A score of a data distribution is the gradient of the log probability density function with respect to the data points:

Most of the time, this quantity is easier to estimate than the actual probability distribution function itself! Probability Distribution functions are frequently expressed as partials normalized by the integral over all partials.3

Computing is often times intractable, especially for high dimensional data. However, we can take the logarithm and differentiate with respect to , and the problematic normalizing constant conveniently vanishes:

We can visualize what the score actually measures by plotting a bunch of points sampled from a two-dimensional Gaussian, as well as the level curves of the probability distribution function. Take the earlier example sample:

The score function of this distribution forms a vector field where larger arrows corresponds to the score. Like in golf, we want a low score – magnitude of a score is larger when a point is further away from the mean:

This sort of flow field provides a natural motivation for gradient descent towards the mean of our prior distribution. To exploit the score, we must first understand how it's related to the MMSE de-noiser.

"Recall" :middle_finger:

Recall from probability theory that, given two independent random variables we can explicitly compute their sum as the convolution4 of the density functions:

In our context, this means that adding Gaussian noise to a clean dataset is equivalent to convolving the density of the data distribution with a Gaussian filter:

We can visualize this by considering a few data points (blue), as well as several more data points obtained by adding Gaussian noise to the clean ones:

The spread of these new points would be proportional to the standard deviation of the Gaussian noise distribution, e.g. if we double the value of from the above distribution we get:

This (hopefully) helps illustrate how Gaussian noise just blurs the distribution of the underlying data, and after some arbitrary amount of noise is added, the data distribution just becomes an indiscernible blob. However, introducing the notion of distribution score allows us to explicitly model our network:

which is known as Tweedie's formula,5 and originates from correspondence between the physicist/statistician to a colleague, Herbert Robbins, which he later referenced at a conference crediting Tweedie. In its original form, it tied the mean of the posterior to the score of some noisy data:

but as established above, approximates the posterior mean, which itself is a blurred instance of the true distribution. Thus, our network just moves the input to areas of with a higher density of lower scores, which is equivalent to mapping points onto their manifolds!

Below is an implementation of a DAE:

import torch

import torch.optim as optim

import torch.nn.functional as F

from torch.utils.data import DataLoader

from torchvision import transforms

from torchvision.datasets import MNIST

from torchvision.utils import save_image

class DenoisingAutoEncoder(nn.Module):

def __init__(self):

super(DenoisingAutoEncoder, self).__init__()

self.encoder = nn.Sequential(

nn.Linear(784, 128),

nn.ELU(),

nn.Linear(128, 64),

nn.ELU(),

nn.Linear(64, 10),

)

self.decoder = nn.Sequential(

nn.Linear(10, 64),

nn.ELU(),

nn.Linear(64, 128),

nn.ELU(),

nn.Linear(128, 784),

nn.Sigmoid()

)

def forward(self, x):

x = x + torch.randn_like(x) # add noise

return self.decoder(self.encoder(x))

def encode(self, x):

return self.encoder(x)

def decode(self, x):

return self.decoder(x)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

max_episodes = 100

batch_size = 128

η = 1e-3

# load dataset from MNIST, save to ../datasets

dataset = MNIST('../datasets', transform=transforms.ToTensor(), download=True)

dataloader = DataLoader(dataset, batch_size=batch_size, shuffle=True)

DAE = DenoisingAutoEncoder()

optimizer = optim.Adam(DAE.parameters(), lr=η)

for episode in range(max_episodes):

for data in dataloader:

img, _ = data

img = img.view(img.size(0), -1) # unpack image to a 1d vector

output = DAE(img)

loss = F.MSELoss(output, img)

optimizer.zero_grad()

loss.backward()

optimizer.step()

Langevin's Algorithm

In the above implementation, we can see that the total loss during training is given by:

and as increases, converges on the expected difference between the de-noised estimate and the reference labels conditional on the noisy input :

Recall that our trained DAE approximates the mean of the posterior distribution , given the noisy input . We can derive this result by expressing this L2 norm in the expectation as a dot product:

And, since the DAE minimizes this term, it's derivative w.r.t. should be :

so, we can see that our/any MMSE estimator will approximate the posterior mean for a noisy input . We can develop further intuition about this with a 1 dimensional example; suppose we have some prior distribution , as well as a noisy observation . For initial simplicity, we'll choose to be normally distributed, but that's rarely the case in practice:

Using these two densities, we can plot the posterior distribution which corresponds to how likely the reconstruction is given the noisy observation :

The posterior distribution takes into account both the actual underlying distribution given by the prior, as well as the likelihood describing how noise effects the observation. As we can see in this simplistic example, the most likely reconstruction lies between the two distributions, and the point where the posterior is maximized is called the maximum a posteriori estimate, or MAP. In this example, the mean of the posterior distribution coincides with the MAP, but for more complex/realistic distributions, it is possible to have multiple local maxima such that these the mean and MAP are not the same. E.g. for a more complex, mixed Gaussian prior, we can see how both downstream distributions are affected:

Which means that our DAE will output reconstructions which are good on average, but may not always be optimal for individual observations since the mean of the posterior no longer coincides with either local maxima. Revisiting Tweedie's formula to see how the posterior mean is connected to the score, we can account for, and correct, this disparity:

Let's break this expression down further.

1. Marginalization

We can marginalize the noisy distribution by expressing it in terms of the likelihood of observing :

Then we can take the gradient w.r.t. on both sides:

and, with some assumptions about the regularity of the distribution,6 we can move the gradient inside the integral on the right hand side:

which illustrates that the gradient of directly depends on the gradient of the likelihood.

2. Explicit Form of Gaussian Likelihood

Recall that, in the case of the Gaussian, likelihood of a conditional observation is proportional to the magical Gaussian expression:

Differentiating that expression, we get:

substituting this into our expression for , we get:

3. Tidying up the Integral

Since the integral is linear, we can decompose it into the combination of the marginalization of and Bayes identity:

which is Tweedie's formula!

Two-dimensional Tweedie's

Let's illustrate this again, for two images in our training set projected on the the plane:

We have our points, as well as some Gaussian samples distributed about them with local maxima at the two reference points.

Each of the orange points here represents a noisy version of whatever the nearest true data point is. Each of these noisy points also follows an underlying density, which depends on the original distribution of images and the noise level applied. This distribution:

is the one we're approximating with our network according to the hope that:

Tweedie's displaces the noisy input in the direction of the density of the real data. So, no matter what input image we start with, Tweedie's will point us in the direction of the closest region where real data density is high.

Approximation is Hard!

As it turns out through, estimating is actually considerably difficult. Today, we use NNs to de-noise images, but those techniques haven't always been available. What we now call "de-noising score matching" was only formally introduced in 2010 by Pascal Vincent in "A connection between Score Matching and DAE."7 Only in the past few years, following the publication of a series of papers on score-based generative models8 has de-noising + score matching become popular (following the rise in relevance/popularity of diffusion models and all the AI slop/R&D that they've brought with them) since generative diffusion models share the same objective function as DAEs.

A naive approach to the problem of score matching might be to directly optimize for the score function of the data distribution:

However, in most real world scenarios, we don't know the true density function of our data. So what can we do? In 2005, Aapo Hyvärinen reformulated the problem into one of minimizing an objective function which doesn't rely on ground truth whatsover:9

This works with the trace10 of the Jacobian11 of the score function and the norm of the score itself. This result was groundbreaking because it offered a theoretical mechanism for approximating any distribution as long as we can sample from it! Of course, this technique is not without its own drawbacks in application though – namely: the computational cost of computing the Jacobian and its trace in high dimensions (that's a lotta derivatives).

On the other hand, de-noising score matching conveniently avoids this issue by relying on adding Gaussian noise to images and computing a lot of L2 distances which are comparatively affordable operation in terms of computation complexity:

Langevin's

Finally, we can look at how to use estimated score to compute de-noised images. Revisiting the earlier example plot of mixed Gaussians:

We have same data samples, and knowledge that the underlying distribution is a mixture of Gaussians. We've also trained a network to approximate the score function of this distribution:

which gives us the direction of maximal probability at any point in space. Taking steps in the direction of maximum likelihood of a minimum score will result in de-noised outputs. We express this as a process of gradient descent on the score of the distribution:

Except, instead of using the real score, we use the approximation provided by the network:

The catch, which is generally applicable to most deep learning problems, is that direct gradient descent won't generate diverse samples. It will always converge on a local maxima of the same output points, no matter what our initial conditions are:

To introduce output diversity, we add a small stochastic, Gaussian noise perturbation to each update, scaled by the step size:

This effectively prevent converge to a single point, even after many steps. Instead, we converge on a high density region of low score. This is called Langevin's algorithm, and we can use it to generate several output images from randomly sampled initially conditions which will –instead of converging on singular local minima– will approximate the entire Gaussian cluster.

Fortunately, this requires minimal modifications to the training loop of our implementation, and we can further simplify it by leveraging existing models:

import torch

import deepinv

from torchvsion import datasets, transforms

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

n_epochs = 10

η = 1e-3

σ = 0.1

train = datasets.MNIST(train=True, transform=transforms.ToTensor(), download=True)

dataloader = torch.utils.data.DataLoader(

train, batch_size=64, num_workers=6, shuffle=True

)

physics = deepinv.physics.Denoising(

noise_model=deepinv.physics.GaussianNoise(σ)

).to(device)

model = deepinv.models.DRUnet(in_chanels=1, out_channels=1, device=device)

loss = deepinv.loss.metric.MSE(train_loss=True)

optim = torch.optim.Adam(model.parameters(), lr=η)

for epoch in range(n_epochs):

running_loss = 0.0

for batch_idx, (data, _) in enumerate(dataloader):

data = data.to(device)

optim.zero_grad()

x_noisy = physics.forward(data)

x_reconstruction = model(x_noisy, σ)

loss_value = loss(x_reconstruction, data).mean()

loss_value.backwards()

optim.step()

running_loss += loss_value.item()

avg_loss = running_loss / len(dataloader)

print(f"{epoch}/n_epochs: loss = {avg_loss:.4f}")

And, to implement Langevin's to generate new samples, we simply take a clean sample, add noise to it, compute the approximate score, and add it to our true sample:

n_steps = 5000

step_size = 0.001 * σ**2

true_x = dataloader.dataset[0][0].unsqueeze(0).to(device) # unpack img to vector

x_sample = true_x + 0.5 * torch.randn_like(x_sample)

with torch.no_grad():

for t in range(n_steps):

grad = (model(x_sample, σ) - x_sample) / σ**2

x_sample = (

x_sample + step_size * grad

+ torch.randn_like(x_sample) * np.sqrt(step_size)

)

which produces distinct de-noised reconstructions! We can attempt to introduce further output diversity by starting from pure noise rather than noisy samples. A problem we encounter though occurs when our initial conditions correspond to a region with no samples implying that we don't have a reliable estimate of score:

The distribution score is so inaccurate in these regions that Langevin's will never discover better regions with reliable score. One possible work around could be to boost and smooth the score so that it covers a larger area or hyper-volume in the space:

Again, though, this comes with its own drawbacks since while increasing the noise levels can fill in the gaps making the score less precise in all regions, not just low-density regions. This problem is precisely what diffusion models aim to fix.

Footnotes

Footnotes

The hypothesis itself vastly predates deep learning, but a good post on its relevance to this context can be found here: https://colah.github.io/posts/2014-03-NN-Manifolds-Topology/ ↩

A partial w.r.t. a PDF yields the probability of that variable occurring regardless of the values of the other variables. ↩

Fancy probability theory speak for the sum of multiple independent random variables' distribution functions. ↩

Robbins, Herbert E. (1992), Kotz, Samuel; Johnson, Norman L. (eds.), "An Empirical Bayes Approach to Statistics", Breakthroughs in Statistics, Springer Series in Statistics, New York, NY pp. 388–394. ↩

our PDF is smooth and differentiable on the interval, dominated convergence holds, and the integral converges. ↩

Vincent, Pascal. "A Connection Between Score Matching and Denoising Autoencoders." University of Montreal, 2010. ↩

Song, Yang. "Generative Modeling by Estimating Gradients of the Data Distribution." yang-song.net. 2021. ↩

Hyvärinen, Aapo. "Estimation of non-normalized statistical models by score matching." Journal of Machine Learning Research, 2005. ↩

The scalar sum of eigenvalues of a square matrix ↩

a matrix containing all the first-order partial derivatives of a vector-valued function ↩